From Invention to Innovation: The Real Pace of Agentic AI Adoption

By Gökçe Ceylan, Principal at Oxx

In a world that constantly talks about things changing faster than ever, I’ll take a slightly controversial approach and focus on what doesn’t change overnight—or even over a few years.

I’m drawn to things that move slowly, that take time, and that endure despite rapid tech cycles. Why? Often, ‘invention’ and ‘innovation’ are conflated, whereas there is a subtle but very important distinction between the two.

An invention (can we build it?) is tied to the speed of tech cycles, whereas an innovation (will people adopt it?) is tied to human behaviour.

For context, a study of how 15 major technologies spread across 166 countries over 200 years showed adoption lags (the time between invention and adoption) averaging 45 years! Cell phones were invented in 1973 and took on average 15 years to be adopted; the internet was invented in 1983 and took on average 8 years to be adopted. Newer technologies are being adopted faster than older ones, but that is not to say the lag has shrunk to zero.

The breakthrough invention of today is AI agents - a new capability with the potential to completely change workplace dynamics. These systems combine autonomy, adaptability, and reasoning to automate complex workflows, enhance decision-making, and redefine collaboration models. They will quite literally define the next generation of work.

But the question isn’t if agents will complete tasks on behalf of humans - they will. It’s when. A few months? A year? A decade?

Given agents’ superb capabilities, it’s no surprise that the thesis “agents will fully automate task X in 6–12 months’ time” has become a common view in the world of B2B software. Due to this shared view, both investors and founders feel the pressure: if they don’t act now, they’ll be late to the market and watch this ‘agentic’ space get claimed by someone else. The idea of first-mover advantage feels more tangible today than it has in decades.

A question I often pose for structuring decisions under uncertainty is “what needs to be true for this statement to be true?” So, given the uncertainty around adoption cycles, let’s try to answer: what needs to be true for agents to fully automate a certain task across organisations in 6–12 months’ time?

Agents will fully automate task X in 6-12 months’ time if:

Agents can perform task X end-to-end, which refers to technical feasibility

Agents deliver compelling ROI vs current humans + tooling models, which refers to commercial viability

Organisations want and are ready to adopt agentic automation, which refers to demand

The first two criteria are relatively easy to demonstrate: technical feasibility and the potential for agents to deliver massive ROI are precisely the factors that fuel the excitement—and the urgency—to seize first‑mover advantage.

So, let’s focus on demand. What needs to be true for organisations to be ready and willing to adopt agentic automation?

In my view, the following five conditions should hold true:

1. ‘Demand’ in the classic sense for B2B software: a real pain point that compels action

As with any B2B SaaS purchase, demand comes from a real problem worth solving. The way task X is currently done must be painful enough that users, buyers, and stakeholders care about fixing it. And that pain must be important enough for the organisation to commit the necessary resources – time, money, and people – to make the transition. Without this combination of pain and willingness to invest, no new solution—AI agents or otherwise—will gain traction.

2. Organisations understand AI agents well enough to see them as the best solution

It isn’t enough for organisations to simply know about AI agents. They must understand them well enough to conclude that agents are the best solution (or at least a strong alternative) for the problem at hand. This level of understanding elevates adoption from experimentation to intentional problem-solving.

3. Multi-sided trust that spans agents, systems, and employees

Trust is the cornerstone of adoption, and it operates on four fronts:

Trust in Agents’ Reliability: Agents won’t be given meaningful work unless they are seen as accurate, consistent, and dependable. This perception forms the baseline before anyone is willing to delegate.

Trust in the System’s Process: It’s one thing to complete a one-off project, and a completely different beast to set up a well-functioning and scalable process. And, trust doesn’t mean micromanaging every step in a process – it means trusting the system to execute correctly or surface issues when they occur. Transparency, auditability, and a proven track record build this new kind of trust.

Trust in Employees’ Own Ability: Employees need enough “AI literacy” to feel confident working with autonomous systems. They must understand how agents operate conceptually, know when to delegate and when to intervene, and be capable of asking good questions or debugging issues. Without this, organisations risk misuse (overtrust or undertrust) or outright resistance.

Trust in Cybersecurity Protections: With great power comes great responsibility. AI agents often have far broader access and action rights than traditional B2B SaaS systems, which raises the stakes on security. Companies need confidence that these agents won’t expose them to new risks. As SOCRadar’s whitepaper on MCP Servers highlights, the growing attack surface created by autonomous systems makes robust cybersecurity assurances non-negotiable. Until organisations are comfortable that their data, infrastructure, and operations remain secure, adoption will lag.

According to a recent study by KPMG, “58 percent of people view AI systems as trustworthy. People have more faith in the technical ability of AI systems to provide accurate and reliable output and services (65%) than in their safety, security, impact on people, and ethical soundness (e.g. that they are fair, do no harm, and uphold privacy rights; 52%).”

4. Redefinition of roles, responsibilities, and career paths in a world where agents take on repeatable tasks

As AI takes over repeatable tasks, organisations must rethink what humans are responsible for. Real productivity gains don’t come from inserting AI into old manual processes. They come from reimagining workflows altogether (think of the shift from the horse-drawn carriage to the car). Employees need to feel that agents respect and augment their roles rather than replace them. This openness to redesigning how work gets done is critical to unlocking AI’s true potential.

This includes clarifying roles, responsibilities, and team composition, while also redefining career progression. For example, the person who configures or manages AI agents may become as valuable as today’s manual specialists. Without this clarity, adoption risks stalling due to fear, resistance, or political gridlock.

5. Alignment and buy-in from organisational stakeholders and gatekeepers

Finally, broad organisational buy-in is essential. Key decision-makers – across IT, compliance, operations, procurement, and legal – must support the shift. If these gatekeepers don’t trust AI to be safe, compliant, and aligned with business goals, implementation won’t move forward, no matter how excited end users are.

So the question becomes: how quickly will these conditions hold?

I appreciate that most of these conditions are not binary; they are spectrums. Early adopters will operate under partial trust, limited literacy, and evolving workflows. But that should prompt the question: what is the minimum viable trust, or good-enough AI literacy, needed to trigger early adoption in my vertical? And how quickly will that be achieved?

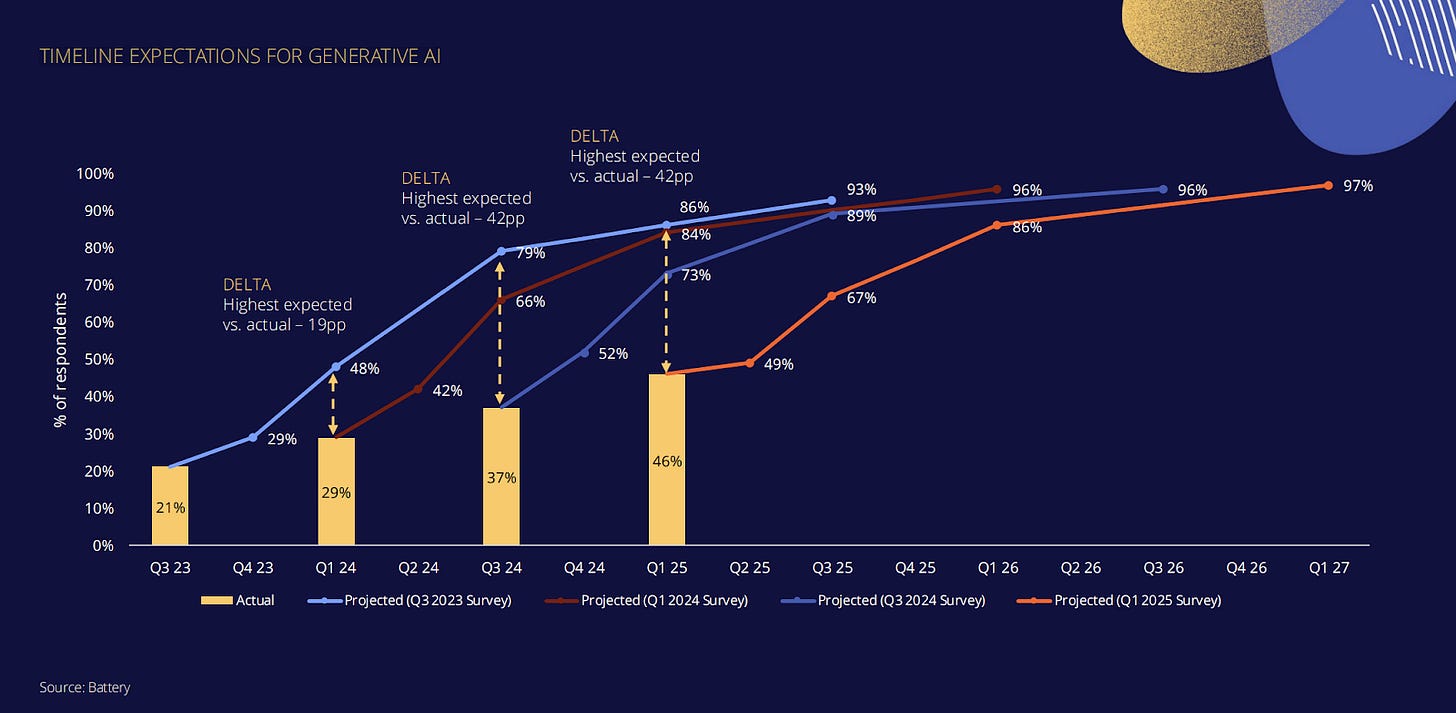

A good reference point comes from Battery’s 2024 Open Cloud Report, which shows a consistent gap between projected and actual AI deployment in production. While expectations were very high (e.g., 79% projected vs. 37% actual in Q2 2024), actual adoption has lagged significantly.
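To put that gap in numbers, here is a minimal sketch in Python that uses only the two Q2 2024 figures quoted above. The function and variable names are illustrative assumptions, not anything taken from the report itself.

```python
def deployment_gap(projected_pct: float, actual_pct: float) -> tuple[float, float]:
    """Return (gap in percentage points, share of the projection that was realised)."""
    gap_points = projected_pct - actual_pct
    realised_share = actual_pct / projected_pct
    return gap_points, realised_share


# Figures as quoted in the text: 79% projected vs. 37% actual in Q2 2024.
gap, share = deployment_gap(projected_pct=79, actual_pct=37)
print(f"Gap: {gap:.0f} percentage points; realised share: {share:.0%}")
# prints "Gap: 42 percentage points; realised share: 47%"
```

In other words, on these figures fewer than half of the projected production deployments had actually materialised.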

You might wonder: why does this gap exist?

Think of it this way – tech cycles are accelerating, but generational cycles remain remarkably stable, typically spanning 15 to 20 years. Why? Because generations are shaped not just by the tools they use, but by the cultural and behavioural norms adopted in their formative years. And those norms, like how people learn, work, build trust, and make decisions, take time to evolve, even in a world of exponential tech acceleration. We may be able to build and deploy agents quickly, but getting humans and institutions to absorb and adapt to them is a longer game.

Of course, it’s a no-brainer that ten years from now ‘work’ won’t look the way it does today. But there are meaningful cultural, organisational, and even psychological hurdles to overcome before we see agents fully automating workflows across the board, let alone in the next 6–12 months. These friction points may be subtle and unspoken, but they are real, and for anyone chasing first-mover advantage, especially in European enterprise markets, it’s worth proactively addressing them.

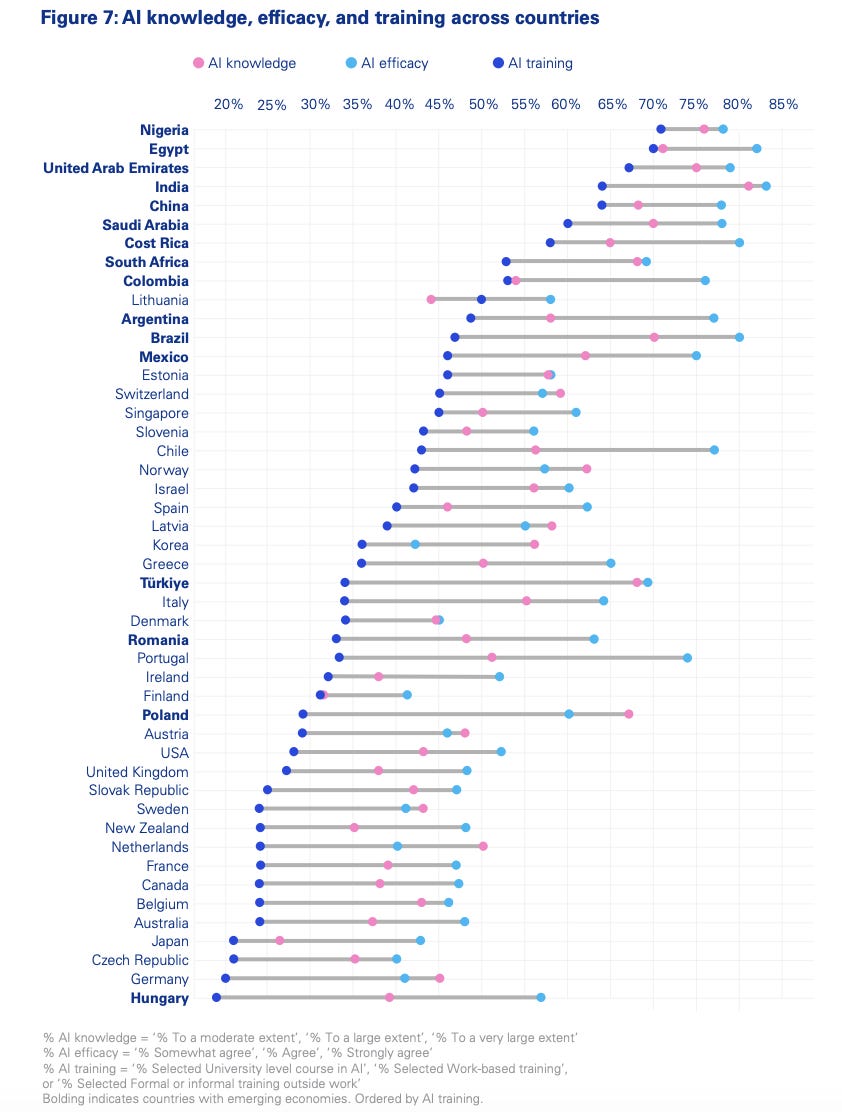

One last thought: it’s important to recognise that AI adoption won’t unfold at the same pace everywhere. Different countries and industries face vastly different regulatory environments and cultural readiness levels. What’s acceptable in a tech-forward U.S. startup might take years to be permitted in a European healthcare system. Fine-tuning your ideal customer profile (ICP) with these factors in mind could make the difference between early traction and a long, expensive wait for the market to catch up.

And, be prepared to be incredibly off in your initial ICP assumptions… The aforementioned KPMG report highlights that AI training, knowledge, and efficacy are lowest in the advanced economies, and highest in emerging markets.

This is not a call to slow down by any means, but a note to draw attention to where it might be worth investing early effort: onboarding, user education, built-in transparency and explainability in product design, change management, and ROI clarity. If anything, this is a call to build deep, not just fast, as a way to turn ‘invention’ into ‘innovation’ and deliver real, long-term value.